“Can’t I just use ChatGPT to predict my sales instead of buying another platform?”

If you’re in sales, marketing, or RevOps, you’ve probably asked this question (or had your CFO ask it). It’s a fair question: generative AI tools seem magical, answer almost anything, and cost far less than specialized software. But here’s the thing: asking generative AI to do predictive analytics is like asking a brilliant English professor to perform brain surgery. Both jobs demand a sharp mind, but one is built on language while the other requires precision with completely different tools.

Let’s dive into why generative AI and predictive analytics platforms are as different as a translator and a financial calculator – both incredibly useful tools, but you wouldn’t use Google Translate to calculate your quarterly revenue projections.

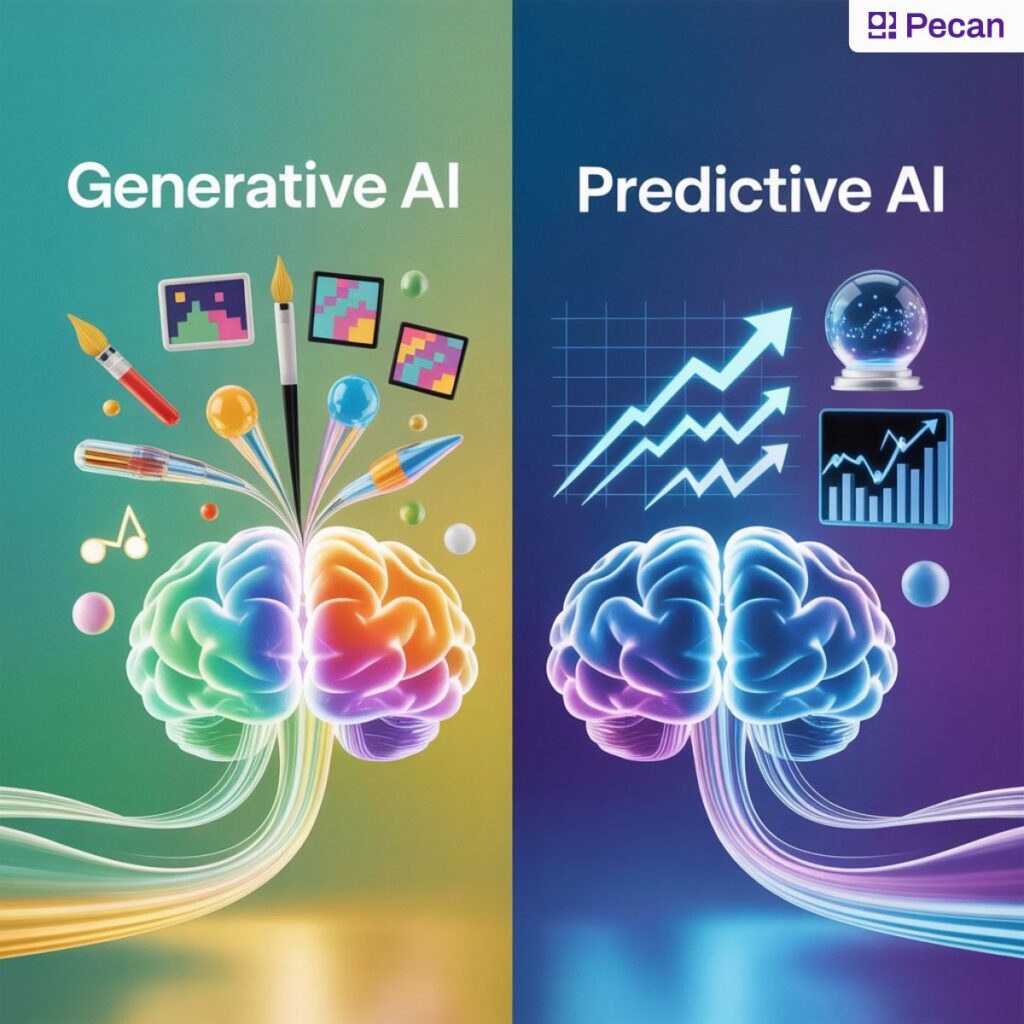

The fundamental difference: language vs. numbers

Generative AI tools like ChatGPT, Claude, Gemini, Grok, and Copilot are all Large Language Models (LLMs), which means they’re essentially the world’s most sophisticated autocomplete systems (no disrespect, just making a point). They predict what word should come next in a sentence based on patterns they learned from billions of text examples. When you ask them a question, they’re generating the most statistically likely response based on language patterns – not performing mathematical calculations.
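To make the “autocomplete” idea concrete, here is a toy sketch: a bigram model that counts which word follows which in a tiny corpus, then predicts the most frequent follower. Real LLMs use neural networks over tokens and vastly more text, so this only illustrates the next-word objective. The corpus and the `train_bigrams` and `predict_next` helpers are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus; real LLMs learn from billions of examples
corpus = "revenue grew in q3 . revenue grew in q4 . revenue fell in q1 ."
model = train_bigrams(corpus)
print(predict_next(model, "revenue"))  # grew ("grew" followed "revenue" twice, "fell" once)
```

The model answers “grew” because that word most often followed “revenue” in its text, not because it computed anything about revenue. That is the gap this article is about.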

Think of it this way: Generative AI is like that friend who’s read everything and can talk intelligently about any topic at a dinner party. But when you need to know whether to launch your product in Q2 or Q3 based on seasonal demand patterns, market trends, and customer behavior data, you don’t want dinner party conversation – you need precision analysis on YOUR proprietary business data.

Where generative AI falls short: the technical reality made simple

Recent testing by data scientists has revealed some eye-opening limitations when any generative AI tool – whether it’s ChatGPT, Claude, or Gemini – attempts predictive analytics. Here’s what happens when you ask these language models to analyze your business data:

Data restructuring disasters

What data restructuring means: Before any prediction can happen, your messy real-world data needs to be organized into a format that models can understand. It’s like organizing a chaotic closet before you can find the right outfit.

Generative AI’s problem: LLMs consistently fail at this crucial first step. When researchers asked various AI assistants to sample data weekly and include only “active users,” the assistants missed both requirements entirely. Instead of organizing the data properly, they introduced sampling bias, which is like organizing your closet by throwing all the red items in one corner regardless of whether they were shirts, socks, or pants. This isn’t just a ChatGPT problem; it’s a fundamental limitation of how language models process structured data.
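For contrast, here is what those two requirements look like when written out explicitly. This is a minimal sketch using only the Python standard library; the event log, the `active_users` set, and the choice of ISO calendar weeks are illustrative assumptions, not the researchers’ actual setup.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date

# Hypothetical raw event log: (user_id, event_date, order_value)
events = [
    ("u1", date(2024, 1, 1), 120.0),
    ("u2", date(2024, 1, 3), 80.0),
    ("u3", date(2024, 1, 4), 50.0),  # u3 has churned and must be excluded
    ("u1", date(2024, 1, 9), 60.0),
    ("u2", date(2024, 1, 10), 40.0),
]
# Hypothetical definition of "active": users currently flagged active in the CRM
active_users = {"u1", "u2"}


def weekly_active_revenue(
    events: list[tuple[str, date, float]], active_users: set[str]
) -> dict[tuple[int, int], float]:
    """Filter to active users, then total order value per (ISO year, ISO week)."""
    totals: dict[tuple[int, int], float] = defaultdict(float)
    for user_id, day, value in events:
        if user_id not in active_users:
            continue  # requirement 2: active users only
        iso_year, iso_week, _ = day.isocalendar()
        totals[(iso_year, iso_week)] += value  # requirement 1: one row per week
    return dict(sorted(totals.items()))


weekly = weekly_active_revenue(events, active_users)
print(weekly)  # {(2024, 1): 200.0, (2024, 2): 100.0}
```

Each rule is one visible line that a reviewer can check. Drop the `continue` and churned users silently inflate the weekly totals, which is exactly the kind of sampling bias described above.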

Data cleaning chaos

What data cleaning means: Real business data is messy – missing values, duplicates, outliers, and errors that would throw off predictions. Data cleaning is like proofreading a report before sending it to your CEO, except there are thousands of potential errors to catch.

Generative AI’s problem: Language models don’t know what to look for. Unlike specialized platforms that have built-in quality controls, generative AI tools might miss critical data issues or, worse, create new ones. Whether you’re using Claude, Grok, or any other LLM, they’re all trained on text, not on identifying data quality issues. It’s like having someone proofread your report who doesn’t know industry terminology – they might fix grammar while missing that you wrote “Q4 revenue” when you meant Q3.
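Here is a small sketch of the three cleaning problems named above: duplicates, missing values, and outliers. The specific rules (fill gaps with the median, flag anything more than three median absolute deviations from the median) are common choices picked for illustration; they are assumptions, not what any particular platform does.

```python
from __future__ import annotations

import statistics

# Hypothetical raw orders: (order_id, amount); None marks a missing amount
raw = [
    ("o1", 100.0), ("o2", 110.0), ("o2", 110.0),  # o2 is an exact duplicate
    ("o3", None), ("o4", 95.0), ("o5", 105.0), ("o6", 9_999.0),
]


def clean_orders(rows, k: float = 3.0):
    """Drop exact duplicates, fill missing amounts with the median, and flag
    amounts more than k median absolute deviations away from the median."""
    deduped = list(dict.fromkeys(rows))  # keeps first occurrence, preserves order
    known = [amt for _, amt in deduped if amt is not None]
    median = statistics.median(known)
    mad = statistics.median([abs(amt - median) for amt in known])
    cleaned = [(oid, median if amt is None else amt) for oid, amt in deduped]
    # If mad is 0 this rule can't separate outliers, so nothing is flagged
    outliers = [oid for oid, amt in cleaned if mad > 0 and abs(amt - median) > k * mad]
    return cleaned, outliers


cleaned, outliers = clean_orders(raw)
print(len(cleaned), outliers)  # 6 ['o6']
```

Note that the outlier is flagged rather than deleted: a $9,999 order could be a typo or your biggest customer, and deciding which takes business context.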

Feature engineering failures

What feature engineering means: This is where you take raw data and transform it into meaningful patterns a model can learn from. For example, instead of just using “date of purchase,” you might create features like “days since last purchase” or “purchase frequency trend.” It’s like taking basic ingredients and preparing them specifically for a recipe.

Generative AI’s problem: LLMs don’t understand the recipe. When researchers tested generative AI against traditional models (SARIMAX, XGBoost, LSTM), the language models performed worst among all approaches. They couldn’t identify the seasonal patterns, cyclical relationships, and business-specific trends that specialized models caught easily. This applies whether you’re using Microsoft’s Copilot for data analysis or asking Gemini to forecast your inventory – they’re all speaking the wrong language for this task.
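The two example features from the definition above, “days since last purchase” and a purchase frequency trend, can be sketched like this. The 90-day window and the definition of the trend (purchases in the latest window minus purchases in the window before it) are assumptions chosen for illustration. The `as_of` date keeps later purchases out of the features.

```python
from __future__ import annotations

from datetime import date


def purchase_features(purchases: list[date], as_of: date, window_days: int = 90) -> dict[str, float]:
    """Turn raw purchase dates into model-ready features, using only purchases
    made on or before `as_of`."""
    past = sorted(d for d in purchases if d <= as_of)
    if not past:
        return {"days_since_last": float("nan"), "recent_count": 0.0,
                "prior_count": 0.0, "frequency_trend": 0.0}
    ages = [(as_of - d).days for d in past]  # last entry is the most recent purchase
    recent = sum(1 for a in ages if a < window_days)                     # latest window
    prior = sum(1 for a in ages if window_days <= a < 2 * window_days)  # window before it
    return {
        "days_since_last": float(ages[-1]),
        "recent_count": float(recent),
        "prior_count": float(prior),
        "frequency_trend": float(recent - prior),  # > 0: buying more often lately
    }


# Hypothetical purchase history for one customer
history = [date(2024, 1, 10), date(2024, 2, 15), date(2024, 4, 20),
           date(2024, 5, 5), date(2024, 6, 1)]
features = purchase_features(history, as_of=date(2024, 6, 30))
print(features)
# {'days_since_last': 29.0, 'recent_count': 3.0, 'prior_count': 2.0, 'frequency_trend': 1.0}
```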

Model training troubles

What model training and testing means: This is where the system learns patterns from historical data, then gets tested on separate data to ensure it can predict accurately. Think of it like training for a sport – you practice with a coach (training data), then compete in a game (test data) to see if you’ve really learned the skills.

Generative AI’s problem: Every LLM is playing the wrong sport entirely. These models were trained to predict words, not numbers. One study of ChatGPT’s answers to Stack Overflow programming questions found that 52% contained incorrect information, and LLMs also struggle with the mathematical reasoning that reliable predictions require. It doesn’t matter if you’re using the latest GPT model or Claude’s most advanced version; it’s like asking a tennis player to suddenly play golf. The fundamental skills don’t transfer.
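The practice-then-compete idea from the training definition above looks roughly like this in code. A straight-line trend stands in, deliberately simplified, for real models such as SARIMAX or XGBoost, and the monthly sales figures are hypothetical. The key move is the chronological split: the model learns only from earlier months and is scored on months it never saw.

```python
from __future__ import annotations


def chronological_split(series: list[float], test_size: int) -> tuple[list[float], list[float]]:
    """Hold out the most recent points as the test set; time-ordered data isn't shuffled."""
    return series[:-test_size], series[-test_size:]


def fit_linear_trend(train: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = a + b * t, with t = 0, 1, 2, ..."""
    n = len(train)
    t_mean = (n - 1) / 2
    y_mean = sum(train) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(train))
    var = sum((t - t_mean) ** 2 for t in range(n))
    b = cov / var
    return y_mean - b * t_mean, b


def mean_absolute_error(actual: list[float], predicted: list[float]) -> float:
    return sum(abs(x - y) for x, y in zip(actual, predicted)) / len(actual)


# Hypothetical monthly sales figures
monthly_sales = [100, 104, 109, 113, 118, 122, 127, 131, 136, 140, 145, 149]
train, test = chronological_split(monthly_sales, test_size=3)  # practice vs. game day
a, b = fit_linear_trend(train)                                  # learn from training data only
forecast = [a + b * t for t in range(len(train), len(monthly_sales))]
mae = mean_absolute_error(test, forecast)                       # score on unseen months
print(f"slope={b:.2f}, test MAE={mae:.2f}")  # slope=4.50, test MAE=0.26
```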

The precision problem: why “close enough” isn’t good enough

This is where things get serious for business applications. All generative AI tools are probabilistic, meaning their outputs can vary each time you ask the same question. That’s fine for creative writing or brainstorming, but imagine if your sales forecasts changed every time you ran them. Your Q4 planning would be chaos.

This variability isn’t a bug; it’s a feature of how LLMs work. They’re designed to generate diverse, creative responses. That’s their strength for content creation but their weakness for business predictions.
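A toy illustration of where the variability comes from: a language model scores candidate next tokens, and sampled decoding draws one at random in proportion to those scores. The candidates and scores below are hypothetical, and real models score tens of thousands of tokens, but the mechanism is the same kind of weighted draw.

```python
from __future__ import annotations

import math
import random

# Hypothetical next-token candidates and scores (logits) for the prompt
# "Next quarter's revenue will be $___M"
candidates = ["1.2", "1.3", "1.5"]
logits = [2.0, 1.6, 0.9]


def softmax(scores: list[float], temperature: float) -> list[float]:
    """Convert scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(rng: random.Random, temperature: float = 1.0) -> str:
    """Draw a token at random, weighted by its probability (sampled decoding)."""
    return rng.choices(candidates, weights=softmax(logits, temperature), k=1)[0]


def greedy_next() -> str:
    """Always take the highest-scoring token (greedy decoding)."""
    return candidates[logits.index(max(logits))]


rng = random.Random()  # unseeded, so each run behaves like a fresh session
print([sample_next(rng) for _ in range(5)])  # varies from run to run
print([greedy_next() for _ in range(5)])     # ['1.2', '1.2', '1.2', '1.2', '1.2']
```

Greedy decoding makes the answer repeatable, but “1.2” is still only the most likely continuation of the text; nothing in this process checks it against your sales history.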

Specialized predictive analytics platforms like Pecan are built with business reliability in mind. They provide:

- Consistent, reproducible results that your team can count on

- Built-in safeguards, for example against data leakage (accidentally training on information that wouldn’t be available at prediction time, such as records created after the outcome you’re trying to predict; a rookie mistake that can cost companies millions)

- Production-ready models that handle big data efficiently without breaking

- Industry-specific expertise that understands your business context
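To show what a leakage safeguard means in practice, here is a generic point-in-time check, a common pattern for catching leakage. It is not a description of how Pecan implements its safeguards; the event log and the `point_in_time_events` and `assert_no_leakage` helpers are hypothetical.

```python
from __future__ import annotations

from datetime import date

# Hypothetical event log: (customer_id, event_date, event_type)
events = [
    ("c1", date(2024, 3, 1), "purchase"),
    ("c1", date(2024, 3, 20), "support_ticket"),
    ("c1", date(2024, 4, 15), "cancellation"),  # the outcome we want to predict
]


def point_in_time_events(events, customer_id: str, cutoff: date):
    """Keep only events the model could actually have seen at prediction time."""
    return [e for e in events if e[0] == customer_id and e[1] < cutoff]


def assert_no_leakage(feature_events, cutoff: date) -> None:
    """Raise if any feature input is dated on or after the prediction cutoff."""
    leaked = [e for e in feature_events if e[1] >= cutoff]
    if leaked:
        raise ValueError(f"data leakage: {len(leaked)} event(s) on/after {cutoff}")


cutoff = date(2024, 4, 1)  # predict churn as of April 1
safe = point_in_time_events(events, "c1", cutoff)
assert_no_leakage(safe, cutoff)  # passes: both remaining events precede the cutoff

leaky = [e for e in events if e[0] == "c1"]  # naive: the customer's entire history
try:
    assert_no_leakage(leaky, cutoff)
except ValueError as err:
    print(err)  # data leakage: 1 event(s) on/after 2024-04-01
```

A model trained on the naive version would “learn” that cancellations predict churn, look brilliant in testing, and fail in production, where the cancellation hasn’t happened yet.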

When the stakes are high, expertise matters

Think about the last time you needed to make a major business decision – launch a new product, adjust pricing strategy, or plan inventory for peak season. These decisions affect revenue, team resources, and company growth. You wouldn’t base them on a tool that gives different answers each time, struggles with data preparation, and performs worse than alternatives in controlled testing.

Generative AI excels at what it was designed for: helping you understand concepts, generating initial code snippets, and explaining data insights in plain English. Tools like ChatGPT, Claude, and Copilot are incredible companions to your analytics work. But for the predictions that drive your business strategy, you need tools built specifically for that purpose.

The bottom line: right tool for the right job

Asking generative AI to replace your predictive analytics platform is like asking Microsoft Word to replace your CRM – both handle information, but they serve completely different purposes. The future isn’t about choosing between generative AI and specialized platforms; it’s about using both intelligently.

Continue using generative AI for what it does best: explaining complex concepts, helping with initial data exploration, creating reports, and making insights more accessible to your team.

Start using specialized predictive analytics platforms for what they do best: reliable, accurate, business-critical predictions that your revenue targets depend on.

Your business deserves predictions backed by mathematical precision, not statistical word guessing. The good news? You don’t have to choose between accessibility and accuracy anymore – Pecan combines the precision of specialized analytics with interfaces that business users can actually understand and trust.

Ready to experience the difference that purpose-built predictive analytics can make for your business? Book a demo with Pecan today and see how real predictive analytics compares to trying to make generative AI do math. Your future forecasts (and your CFO) will thank you.