Data preparation is critical in any machine learning project because it directly influences your model's performance and accuracy.

Yet it's often overlooked or misunderstood.

In this guide, you’ll explore what data preparation is and why it's essential for successful machine learning outcomes. You'll also find some common misconceptions that could set your progress back.

Most importantly, you’ll get a detailed, step-by-step guide to navigate the data preparation process effectively.

Ready to get going? Let's start.

What is data preparation for machine learning?

Data preparation for machine learning is the process of cleaning, transforming, and organizing raw data into a format that machine learning algorithms can understand.

To break it down:

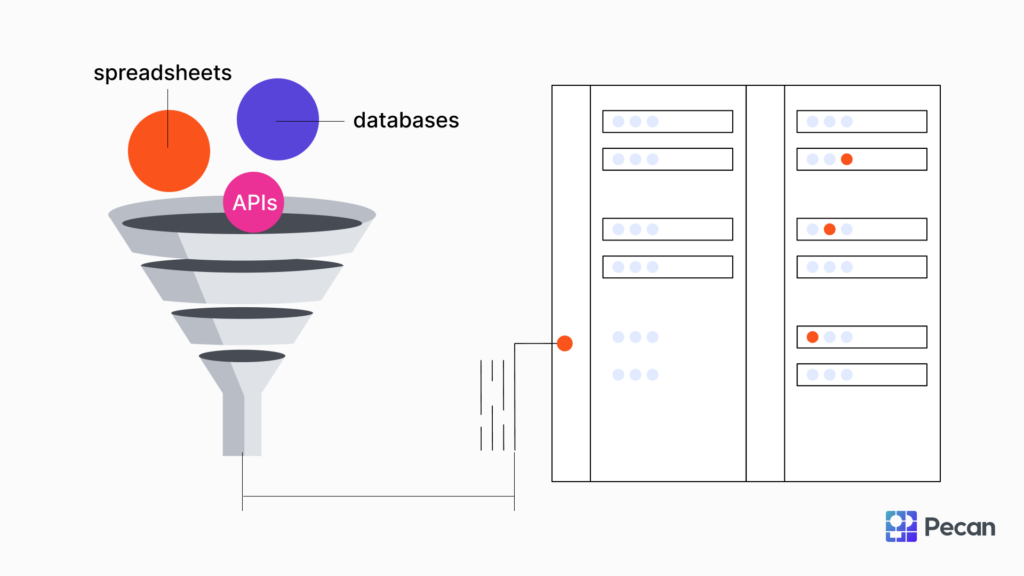

- You start with collecting data from various sources like databases, spreadsheets, or APIs.

- Next, you clean the data by filling in or removing missing values and correcting outliers and inconsistencies.

- Then, you transform the data through processes like normalization and encoding to make it compatible with machine learning algorithms.

- Finally, you reduce the data's complexity without losing the information it can provide to the machine learning model, often using techniques like dimensionality reduction.

Preparing data is a continuous process rather than a one-time task. As your model evolves or as you acquire new data, you'll need to revisit and refine your data preparation steps.

Why is data preparation for machine learning important?

In machine learning, the algorithm learns from the data you feed it. And the algorithm can only learn effectively if that data is clean and complete.

Without well-prepared data, even the most advanced algorithms can produce inaccurate or misleading results.

For example, missing values in customer engagement metrics or outliers in website traffic data can distort your marketing model's predictions. Similarly, if your data is imbalanced (say, heavily skewed toward one customer demographic), it can lead to biased marketing strategies that don't generalize well to your entire customer base.

Poorly prepared data can also lead to overfitting, where the model performs well on the training data but poorly on new, unseen data. This makes the model less useful in real-world applications.

Common misconceptions about data preparation

Several myths about data preparation can set back your machine-learning efforts. If you act on them, you may waste time and end up with poor model performance.

So let's break down these myths and align them with reality.

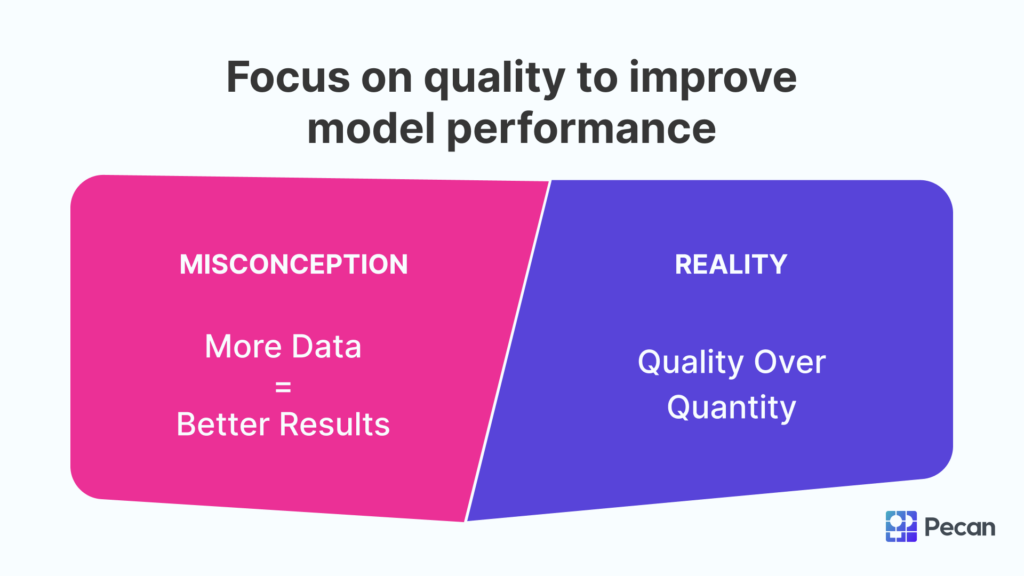

Misconception 1: More data is always better

One of the biggest misconceptions is that collecting more data will automatically improve your machine-learning model.

In reality, quality matters more than quantity. Simply storing more data won't help if that data is noisy, irrelevant, or full of errors.

This misconception is harmful because it leads to wasted resources. Companies may spend time and money collecting and storing huge amounts of data that don't contribute to better model performance.

Sometimes, the noise from irrelevant or poor-quality data can even degrade a model's performance, making it less accurate and reliable.

Misconception 2: Data preparation is a one-time task

Another common myth is thinking that data preparation in machine learning is a one-time task — something you do once and then forget about.

The reality is that data preparation is an ongoing process. As your machine learning model evolves or new data becomes available, you'll often find that you need to revisit and refine your data preparation steps.

Treating data preparation as a one-off task means your model keeps learning from outdated data, which erodes its accuracy over time.

Misconception 3: Manual data preparation is always better

Many people believe that manual data preparation methods are the gold standard.

And it's true that manual oversight can catch nuances automated tools might miss. However, relying only on manual methods has its drawbacks. Handling data preparation manually can be time-consuming and prone to human error, slowing the entire machine-learning process from data gathering to model deployment.

Automated tools can efficiently handle many aspects of data preparation, often more quickly and with fewer errors than a manual approach.

Pecan is changing the game here by automatically preparing raw and messy data for AI models. It builds and evaluates predictive models behind the scenes, using metrics that matter most to your business.

Predictions are ready in days, not months, and Pecan regularly offers updated predictions on fresh data through automated scheduling.

This level of automation speeds up the data preparation process and greatly reduces the risk of human error.

Step-by-step guide to data preparation for machine learning

Think of data preparation as laying the groundwork for your machine-learning model.

Each step is designed to refine your data, making it a reliable input for accurate and insightful predictions.

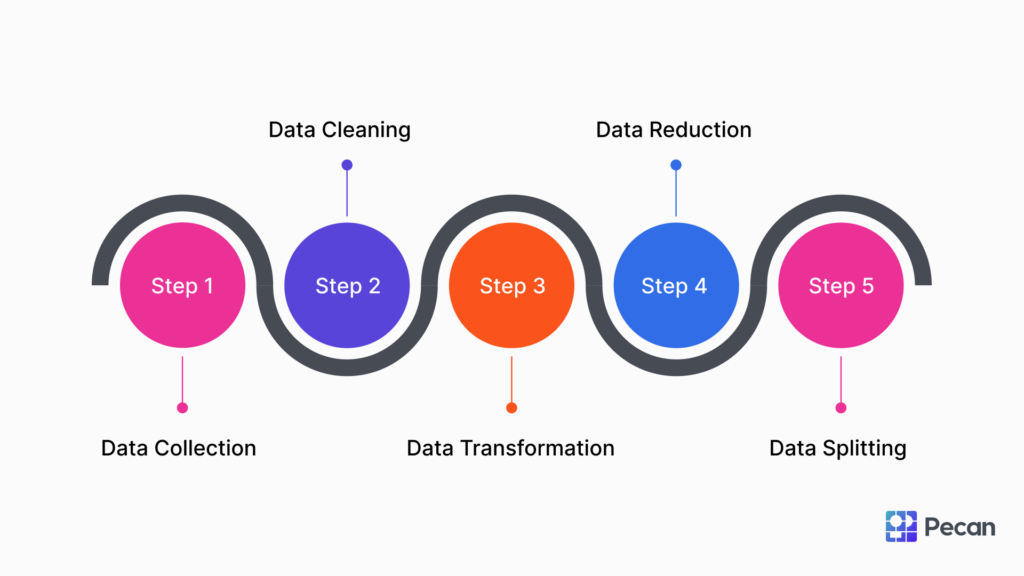

Step 1: Collecting data

The first step in data preparation for machine learning is collecting the data you'll need for your model.

The sources of this data can vary widely depending on your project's requirements. You might pull data from databases, APIs, spreadsheets, or even scrape it from websites. Some projects may also require real-time data streams.

It's important to ensure the data you collect is relevant to the problem you're trying to solve. Irrelevant or low-quality data can lead to poor model performance, so be selective and focused in your data collection efforts.

Step 2: Cleaning data

Once you've collected your data, the next step is to clean it. Here you’ll need to identify and handle missing values, outliers, and inconsistencies in the dataset.

Let’s break down each component.

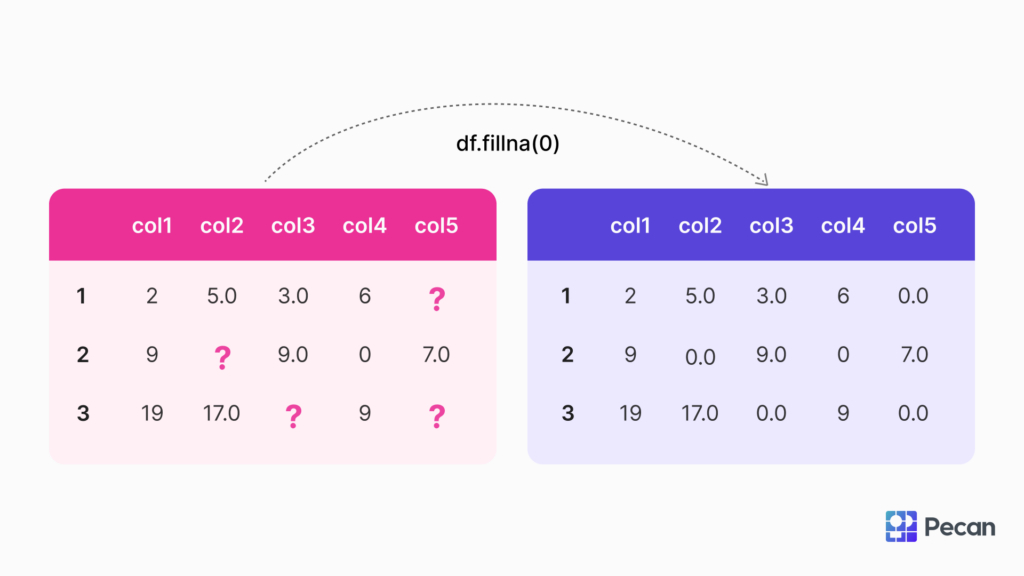

Handling missing values

Missing values occur when entries in your dataset are blank or null. Missing data can be a tricky issue, but there are several ways to handle it.

Imputation is one such method where you replace missing values with estimated ones. The goal is to guess the missing value based on other available information.

For example, suppose you're working with time-series data where continuity and sequence are important. In that case, you might opt for imputation methods like forward-fill or backward-fill to replace missing numeric values.
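To make forward-fill and backward-fill concrete, here is a minimal sketch in Python with pandas. The `sessions` series and its values are hypothetical daily website visits with two gaps; the point is how each method fills them.

```python
import pandas as pd

# Hypothetical daily website sessions with two missing days (None becomes NaN)
sessions = pd.Series(
    [120, None, 135, 140, None],
    index=pd.date_range("2024-01-01", periods=5, freq="D"),
    name="sessions",
)

# Forward-fill: carry the last observed value forward in time
ffilled = sessions.ffill()

# Backward-fill: copy the next observed value backward in time
bfilled = sessions.bfill()

print(pd.DataFrame({"raw": sessions, "ffill": ffilled, "bfill": bfilled}))
```

Notice that backward-fill leaves the final gap empty because there is no later value to copy, so in practice you may need a second rule for gaps at the edges of a series.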

If you're dealing with a dataset where missing values are random and don't follow a pattern, you could replace missing numeric values with the mean or median of the column.
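Here's a minimal pandas sketch of mean and median imputation, assuming a hypothetical `customers` table where two customers have no recorded `monthly_spend`:

```python
import pandas as pd

# Hypothetical customer table with two missing spend values
customers = pd.DataFrame({
    "customer_id": [1, 2, 3, 4, 5],
    "monthly_spend": [40.0, None, 55.0, 1000.0, None],
})

mean_spend = customers["monthly_spend"].mean()      # NaN values are skipped by default
median_spend = customers["monthly_spend"].median()  # less sensitive to extreme values

customers["spend_mean_imputed"] = customers["monthly_spend"].fillna(mean_spend)
customers["spend_median_imputed"] = customers["monthly_spend"].fillna(median_spend)
print(customers)
```

The single 1,000 value pulls the mean up to 365, while the median stays at 55, which is why the median is often the safer choice when a column contains outliers.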

In some cases, particularly when filling in the missing data could introduce bias, it might be better to delete those rows. For instance, if you're analyzing a marketing campaign and critical data like click-through rates or conversion rates are missing, it might be more accurate to remove those records than to base your analysis on guessed values.
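As a minimal sketch, assuming a hypothetical `campaigns` table in which `ctr` and `conversion_rate` are the critical columns, pandas' `dropna(subset=...)` removes only the rows missing those metrics and ignores blanks elsewhere:

```python
import pandas as pd

# Hypothetical campaign records; ctr and conversion_rate are treated as critical
campaigns = pd.DataFrame({
    "campaign": ["A", "B", "C", "D"],
    "ctr": [0.021, None, 0.034, 0.018],
    "conversion_rate": [0.004, 0.006, None, 0.003],
    "notes": [None, "holiday", None, None],
})

critical = ["ctr", "conversion_rate"]

# Drop rows missing any critical metric; blanks in "notes" don't trigger a drop
cleaned = campaigns.dropna(subset=critical)
dropped = len(campaigns) - len(cleaned)

print(cleaned)
print(f"Dropped {dropped} of {len(campaigns)} rows")
```

Checking how many rows you drop is worth doing, because deleting a large share of records can itself skew the remaining sample.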

Handling outliers

Outliers are data points that are significantly different from the rest of the data. For example, in a marketing context, these could be unusually high website traffic on a particular day or a large purchase amount.

These outliers can skew your analysis and lead to incorrect conclusions.

One technique to identify outliers is the z-score, which measures how many standard deviations a data point is from the mean of the dataset: you subtract the mean from the value and divide by the standard deviation. In simpler terms, it helps you understand how "abnormal" a particular data point is compared to the average. A z-score above 3 or below -3 usually indicates an outlier.
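The z-score calculation is short enough to write out directly. This sketch assumes a hypothetical series of 20 days of website visits with one spike on the last day, and flags any day whose absolute z-score exceeds 3:

```python
import pandas as pd

# Hypothetical daily website visits for 20 days; the last day is a spike
visits = pd.Series(
    [100, 102, 98, 101, 99, 103, 97, 100, 101, 99,
     102, 98, 100, 101, 99, 100, 102, 98, 100, 400],
    dtype=float,
)

# z = (x - mean) / std; pandas' .std() is the sample standard deviation (ddof=1)
z_scores = (visits - visits.mean()) / visits.std()

# Flag points more than 3 standard deviations above or below the mean
is_outlier = z_scores.abs() > 3
print(visits[is_outlier])
```

With this data only the 400-visit day is flagged. Keep in mind that an extreme value also inflates the standard deviation it is measured against, so on small datasets z-scores can miss some outliers.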

Once you've identified outliers using z-scores, you have a few options. You can remove them to prevent them from skewing your model, or cap them at a certain value to reduce their impact. This decision often depends on the specific context and goals of your marketing analysis.
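Both options take only a line or two in pandas. In this sketch, the hypothetical `visits` series holds 20 days of traffic with one 400-visit spike; the code shows removing the flagged point and, alternatively, capping (clipping) values at three standard deviations from the mean. The three-standard-deviation cap is an illustrative choice, not a fixed rule.

```python
import pandas as pd

# Hypothetical daily website visits for 20 days; the last day is a spike
visits = pd.Series(
    [100, 102, 98, 101, 99, 103, 97, 100, 101, 99,
     102, 98, 100, 101, 99, 100, 102, 98, 100, 400],
    dtype=float,
)

mu, sigma = visits.mean(), visits.std()
is_outlier = ((visits - mu) / sigma).abs() > 3

# Option 1: remove the flagged points entirely
removed = visits[~is_outlier]

# Option 2: cap (clip) every value to lie within mean +/- 3 standard deviations
lower, upper = mu - 3 * sigma, mu + 3 * sigma
capped = visits.clip(lower=lower, upper=upper)

print(len(removed), round(capped.iloc[-1], 1))
```

Removing shrinks the dataset, while capping keeps every row but limits how far the extreme value can pull the model.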

Handling inconsistencies

Inconsistencies in your data can throw off your analysis and result in misleading information.

In the marketing context, this could range from inconsistent naming in your customer database to conflicting metrics across different analytics platforms.

For example, if you're tracking customer interactions across multiple channels like email, social media, and your website, inconsistent naming or tagging can make it difficult to aggregate this data into a single customer view.

To fix this, you can apply domain-specific rules that standardize naming and metrics.

For instance, you might create a rule that automatically changes all instances of "e-mail" and "Email" to a standard "email" in your database.
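A rule like this can be written as a simple lookup table. The sketch below assumes a hypothetical `interactions` table and a hand-built `CHANNEL_MAP`; it normalizes case and stray whitespace first, then maps each known variant to one canonical label:

```python
import pandas as pd

# Hypothetical interaction log with inconsistent channel labels
interactions = pd.DataFrame({
    "customer_id": [1, 1, 2, 3, 3],
    "channel": ["Email", "e-mail", "Social Media", "email ", "social"],
})

# Hypothetical domain rule: every known variant maps to one canonical label
CHANNEL_MAP = {
    "email": "email",
    "e-mail": "email",
    "social media": "social",
    "social": "social",
}

# Normalize case and surrounding whitespace, then apply the mapping
normalized = interactions["channel"].str.strip().str.lower()
interactions["channel"] = normalized.map(CHANNEL_MAP)
print(interactions)
```

Any label not in the map comes out as missing (`NaN`), which conveniently surfaces new variants you haven't written a rule for yet.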

Data validation techniques can also be helpful here. You could set up automated checks that flag inconsistencies or anomalies, allowing you to correct them before they impact your analysis.
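Automated checks can start as a handful of boolean rules. This sketch assumes a hypothetical daily campaign table and three illustrative rules (a known list of channels, non-negative spend, and no more clicks than impressions). The hypothetical `find_issues` helper returns only the rows that break at least one rule, with one column per rule showing which check failed:

```python
import pandas as pd

# Hypothetical rule: the only channel labels expected after cleaning
ALLOWED_CHANNELS = {"email", "social", "website"}

def find_issues(df: pd.DataFrame) -> pd.DataFrame:
    """Return one boolean column per rule, keeping only rows that fail at least one."""
    checks = pd.DataFrame({
        "unknown_channel": ~df["channel"].isin(ALLOWED_CHANNELS),
        "negative_spend": df["spend"] < 0,
        "clicks_exceed_impressions": df["clicks"] > df["impressions"],
    })
    return checks[checks.any(axis=1)]

# Hypothetical daily campaign data with a few planted problems
daily = pd.DataFrame({
    "channel": ["email", "Email", "social", "website"],
    "spend": [120.0, 80.0, -5.0, 60.0],
    "clicks": [30, 12, 40, 500],
    "impressions": [1000, 900, 1200, 400],
})

flagged = find_issues(daily)
print(flagged)
```

You could run a check like this every time new data arrives and route flagged rows to a person for review before they reach your analysis.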

By taking the time to address these inconsistencies, you improve the quality of your current analysis and set the stage for more accurate and insightful analyses in the future.

Step 3: Data transformation

Transforming your data is crucial because how you prepare it will directly impact how well your model can learn from it.

Data transformation is the process of converting your cleaned data into a format suitable for machine learning algorithms. This often involves feature scaling and encoding, among other techniques.

Feature scaling

In marketing data, you might have variables on very different scales, like customer age and monthly spending. Feature scaling puts these variables on a comparable scale so that one with a larger numeric range doesn't disproportionately influence the model, which matters especially for distance-based and gradient-based algorithms. Methods like min-max scaling or standardization are commonly used for this.
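Here's a minimal sketch of both methods using plain pandas arithmetic, assuming a hypothetical `features` table with customer age and monthly spend:

```python
import pandas as pd

# Hypothetical customer features on very different scales
features = pd.DataFrame({
    "age": [22, 35, 47, 58],
    "monthly_spend": [50.0, 400.0, 1200.0, 250.0],
})

# Min-max scaling: (x - min) / (max - min) maps each column onto [0, 1]
min_max = (features - features.min()) / (features.max() - features.min())

# Standardization: (x - mean) / std gives each column mean 0 and standard deviation 1
standardized = (features - features.mean()) / features.std()

print(min_max.round(3))
print(standardized.round(3))
```

In a real project, compute the minimum, maximum, mean, and standard deviation on the training set only, then reuse those values to scale the validation and test sets, so information from held-out data doesn't leak into training.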

Feature encoding

Categorical values, such as customer segments or product categories, must be converted to a numerical format. Feature encoding techniques like one-hot encoding or label encoding transform these categorical variables into numbers that machine learning algorithms can use. Keep in mind that label encoding assigns arbitrary integers to categories, so the model should still treat those codes as categories rather than as ordered quantities; one-hot encoding avoids implying any order.
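This sketch shows both encodings in pandas for a hypothetical `segment` column. `pd.get_dummies` produces one 0/1 column per category, and converting to pandas' `category` dtype exposes integer codes for label encoding:

```python
import pandas as pd

# Hypothetical customer segment column
df = pd.DataFrame({"segment": ["new", "loyal", "churn_risk", "loyal"]})

# One-hot encoding: one 0/1 column per category, implying no order
one_hot = pd.get_dummies(df["segment"], prefix="segment", dtype=int)

# Label encoding: one integer code per category (codes follow the sorted category order)
df["segment_code"] = df["segment"].astype("category").cat.codes

print(pd.concat([df, one_hot], axis=1))
```

With label encoding here, `churn_risk`, `loyal`, and `new` become 0, 1, and 2 simply because that's their alphabetical order, which is exactly the kind of arbitrary ordering a model shouldn't read meaning into.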

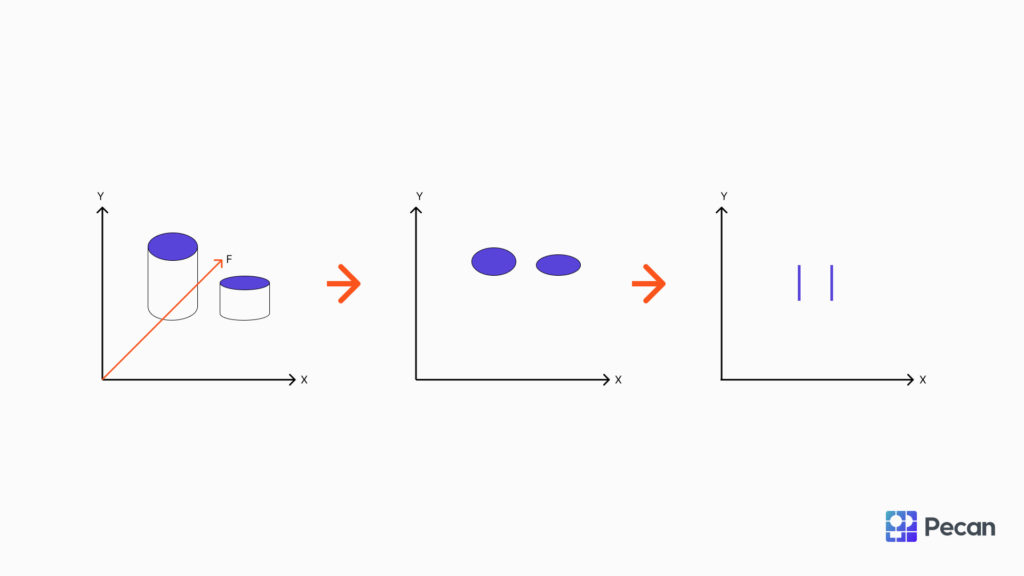

Step 4: Data reduction

Simplifying your data helps your machine learning model spot patterns more easily, giving you faster, more accurate marketing insights for timely decisions.

Data reduction is the process of simplifying your data without losing its essence. This is particularly useful in marketing, where you often deal with large datasets that can be cumbersome to analyze.

Data reduction techniques can make your datasets more manageable and speed up your machine-learning algorithms, often with little or no loss in model performance.

One common method is dimensionality reduction, which reduces the number of input variables (features) in your dataset while preserving its key information.

For example, if you have customer data with numerous variables like age, income, spending habits, and more, dimensionality reduction can help you focus on the most impactful variables.
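The article doesn't name a specific technique, so this sketch uses principal component analysis (PCA), one widely used form of dimensionality reduction, implemented with NumPy's SVD. The customer data is synthetic and hypothetical: income, annual spend, and monthly visits share a hidden "engagement" driver, while age is independent. Note that PCA builds new combined variables (components) rather than picking a subset of the original ones; if you want to keep original variables, feature selection is the alternative.

```python
import numpy as np

def pca(X: np.ndarray, n_components: int):
    """Project X onto its top principal components; return projections and explained-variance ratios."""
    # Standardize each column so features measured in larger units don't dominate
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = S**2 / np.sum(S**2)
    return Z @ Vt[:n_components].T, explained[:n_components]

# Synthetic, hypothetical customer data (200 customers x 4 features)
rng = np.random.default_rng(seed=0)
n = 200
engagement = rng.normal(size=n)  # hidden driver shared by three features
X = np.column_stack([
    40 + 10 * rng.normal(size=n),                         # age (independent)
    60 + 15 * engagement + rng.normal(scale=3, size=n),   # income, in thousands
    5 + 2 * engagement + rng.normal(scale=0.5, size=n),   # annual spend, in thousands
    8 + 3 * engagement + rng.normal(scale=1, size=n),     # site visits per month
])

reduced, explained = pca(X, n_components=2)
print(reduced.shape)       # (200, 2)
print(explained.round(3))  # share of total variance kept by each component
```

Because three of the four features move together, the first component captures most of their shared variation, and two components retain most of the information from the original four columns.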

https://www.youtube.com/watch?v=3uxOyk-SczU

Step 5: Data splitting

The last step in preparing your data for machine learning is splitting it into different sets: training, validation, and test sets.

Correctly splitting your data lets you check whether your machine learning model generalizes well to new data, which makes its marketing predictions more reliable and actionable.

A common practice is to use a 70-30 or 80-20 ratio for the training and test sets. The training set is used to train the model, and the test set is used to evaluate it. Some practitioners also use a validation set, either carved out of the training set or kept separate, to tune the model's settings (hyperparameters) before the final evaluation on the test set.
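Here's a minimal sketch of a shuffled three-way split in pandas. The 70/15/15 ratios, the `train_val_test_split` helper, and the `customers` table are all hypothetical choices for illustration:

```python
import numpy as np
import pandas as pd

def train_val_test_split(df: pd.DataFrame, train: float = 0.7, val: float = 0.15, seed: int = 42):
    """Hypothetical helper: shuffle rows, then cut them into train / validation / test slices."""
    shuffled = df.sample(frac=1.0, random_state=seed)
    n_train = round(len(df) * train)
    n_val = round(len(df) * val)
    return (
        shuffled.iloc[:n_train],
        shuffled.iloc[n_train:n_train + n_val],
        shuffled.iloc[n_train + n_val:],  # whatever remains becomes the test set
    )

# Hypothetical table of 100 customers
customers = pd.DataFrame({"customer_id": range(100), "spend": np.arange(100) * 1.5})

train_df, val_df, test_df = train_val_test_split(customers)
print(len(train_df), len(val_df), len(test_df))  # 70 15 15
```

Fixing the random seed makes the split reproducible, so every version of your model is evaluated on the same test rows.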

It's essential to ensure each set represents the overall data. For example, if you're analyzing customer segments, make sure each set contains roughly the same mix of segments as the full dataset to avoid bias.
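A stratified split samples the same fraction from each segment so that every set mirrors the overall mix. This sketch uses pandas' `groupby(...).sample(...)` (available in pandas 1.1 and later); the `stratified_split` helper and the segment sizes are hypothetical:

```python
import pandas as pd

def stratified_split(df: pd.DataFrame, by: str, test_frac: float = 0.2, seed: int = 42):
    """Hypothetical helper: sample test_frac of each group so both splits keep the group mix."""
    test_index = df.groupby(by).sample(frac=test_frac, random_state=seed).index
    return df.drop(test_index), df.loc[test_index]

# Hypothetical customer table: 50% new, 30% loyal, 20% churn risk
customers = pd.DataFrame({
    "customer_id": range(100),
    "segment": ["new"] * 50 + ["loyal"] * 30 + ["churn_risk"] * 20,
})

train_df, test_df = stratified_split(customers, by="segment")
print(train_df["segment"].value_counts(normalize=True))
print(test_df["segment"].value_counts(normalize=True))
```

Here each segment contributes 20% of its rows to the test set, so the 50/30/20 segment mix is preserved in both splits.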

https://www.youtube.com/watch?v=_vdMKioCXqQ

Ready to prepare your data for machine learning?

Data preparation is essential for effective machine learning models. It involves crucial steps like cleaning, transforming, and splitting your data.

Automated tools like Pecan can streamline this process, making it easier to turn raw data into actionable information. The end goal is a successful marketing campaign built on predictions generated through well-prepared data.

If you want to leverage machine learning for more effective business decisions, speak with a data preparation expert from Pecan today.

Want to boost your data analysis? Sign up for a free trial to gain more data-driven insights.