In the complex world of AI and data science, asking simple, pointed questions can help steer an AI initiative toward real bottom-line results. There is plenty of hype about the economy-transforming information revolution and AI/machine learning technologies. But deciding how to bring AI into a business doesn't require understanding the entire umbrella of AI disciplines. While many of them are groundbreaking, most don't directly drive revenue.

One simple question can serve as a litmus test for businesses deciding whether to buy an AI platform or hire a team of data scientists.

Is the value worth the cost?

From what we’ve seen at Pecan, this question can be hard to answer for two reasons:

(1) Business leaders aren’t sure what outputs from predictive models should look like. They don’t know what business value to expect or demand.

(2) Business leaders are often tragically optimistic about the total and ongoing costs of building data science in-house.

Let’s talk about the costs of building in-house data science.

The Cost of Building Data Science In-House: 7 Reasons It Adds Up

1. Hiring and Retaining Data Scientists is Expensive

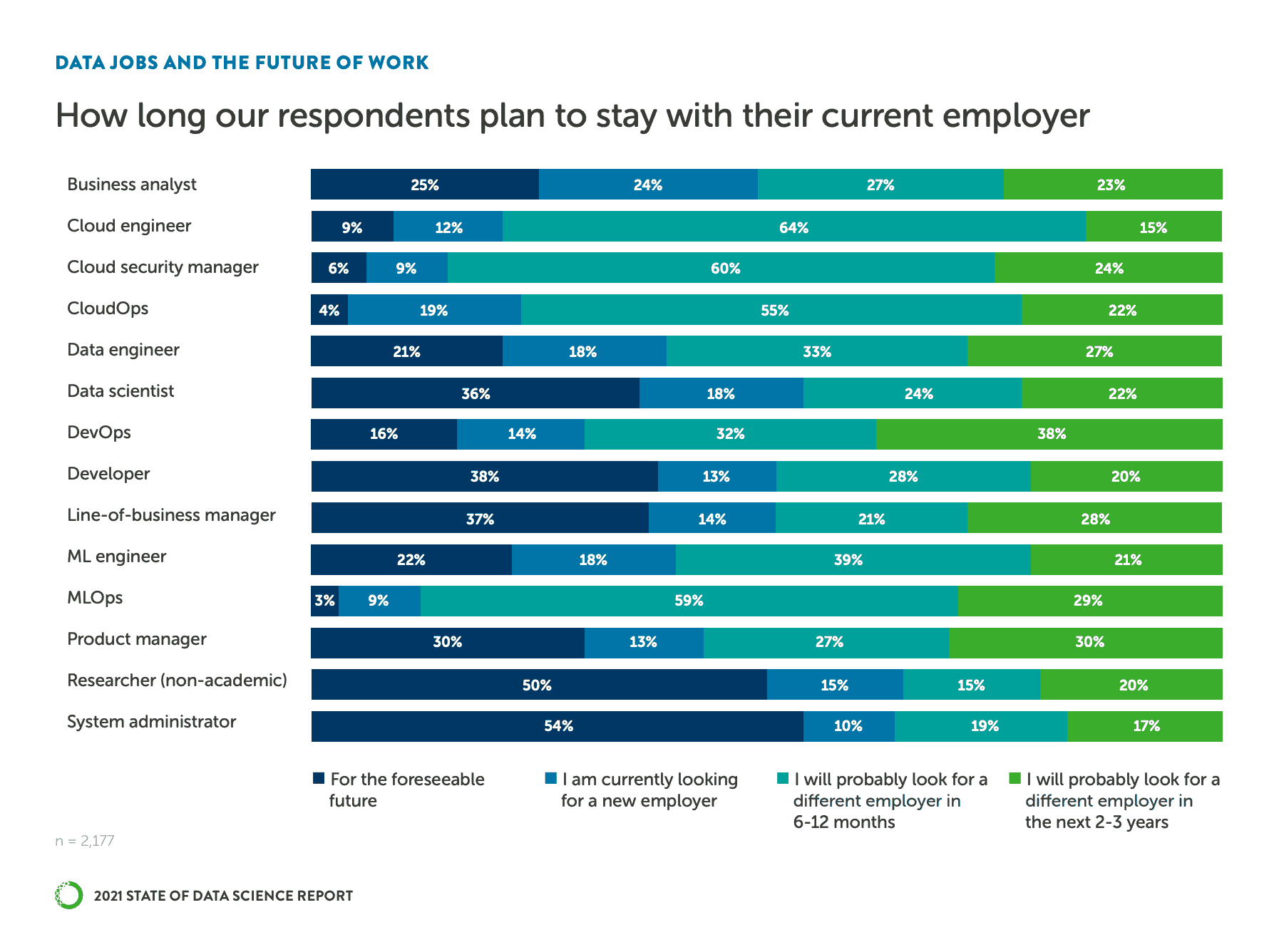

Buyers should beware of the imbalanced market for data scientists. Demand far outstrips supply, which has driven astronomical salaries and short tenures. The monetary cost of a team can range from hundreds of thousands to millions of dollars. Businesses also struggle to structure these expensive teams, because there is no prevailing organizational model for data science outside the Center of Excellence popularized by massive tech companies.

Because it's easy for data scientists to find another position, there's little pressure on them to guarantee results. By contrast, most predictive platforms specialize in use cases like customer churn or lifetime value precisely so they can guarantee statistical and technological reliability. Buying a platform also skips the expensive chaos of hiring and structuring a team.

2. Data Science is Disconnected from Business

The "data science disconnect" means that AI and analytics teams are fundamentally separated from the business problems they are supposed to solve. Importantly, they also aren't accountable for the results.

For example, customer churn may be just another metric for an analytics team that reports on financial, transactional, legal, and other critical data. For the Customer Experience team, on the other hand, it's everything. That's their entire job.

That's why at Pecan we've seen Customer Experience teams come to us directly, looking to predict customer churn and retention. That way, they can say they did everything possible to optimize their most critical KPI.

3. Liaising With Business is a Full-Time Job

When it comes to reporting predictions, monthly meetings or quarterly business reviews (QBRs) are too infrequent to create ongoing impact. If you're predicting over a one-week time horizon, the business needs those predictions delivered every week. Business teams may also request changes or ask to drill down into the results.

The data science disconnect is a pervasive problem. It's bridged only by business teams who are willing to ping, manage, and follow up incessantly. Too often, we see models trained and deployed that optimize for the wrong KPI or prioritize the wrong treatments, simply because the data science team lacks perspective on the common-sense realities of business operations.

4. Manual Time-to-Value, Emphasis on Time

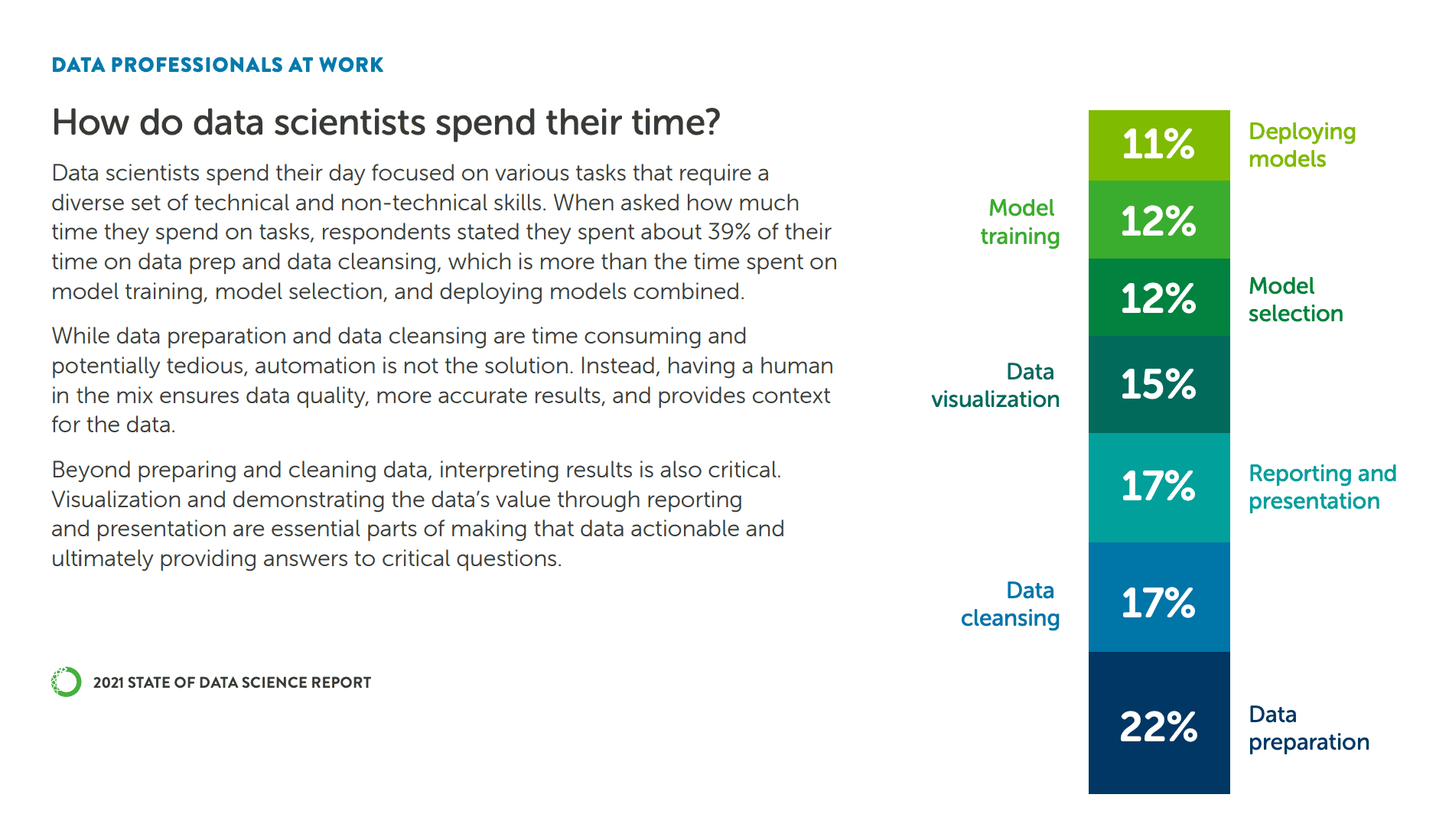

Once you've gotten past hiring data scientists and aligning them with the parts of the business they'll be accountable to (or vice versa?), there's the challenge of the actual work. Automation is key to speed. Data preparation takes up 45% of data scientists' working time, and data visualization takes another 21%. (That's why we've automated data exploration to speed up data prep.) This process can take months, and any part of it can hit a snag.

5. MLOps Isn't Part of the Job Description

MLOps usually isn't part of the job description for in-house data science. Once models are trained and producing outputs, and those outputs are validated and shown to have an impact on the business, the work doesn't end. Next comes the onerous task of connecting those outputs to the systems your business teams use to do their jobs. This important step is why there's a growing range of job titles like ML Engineer and MLOps Manager, each requiring its own specialized skills and experience, and each incurring additional costs. Automating this process is feasible today with platforms designed to integrate with your business's systems of record.

6. Building Applications Requires Maintenance

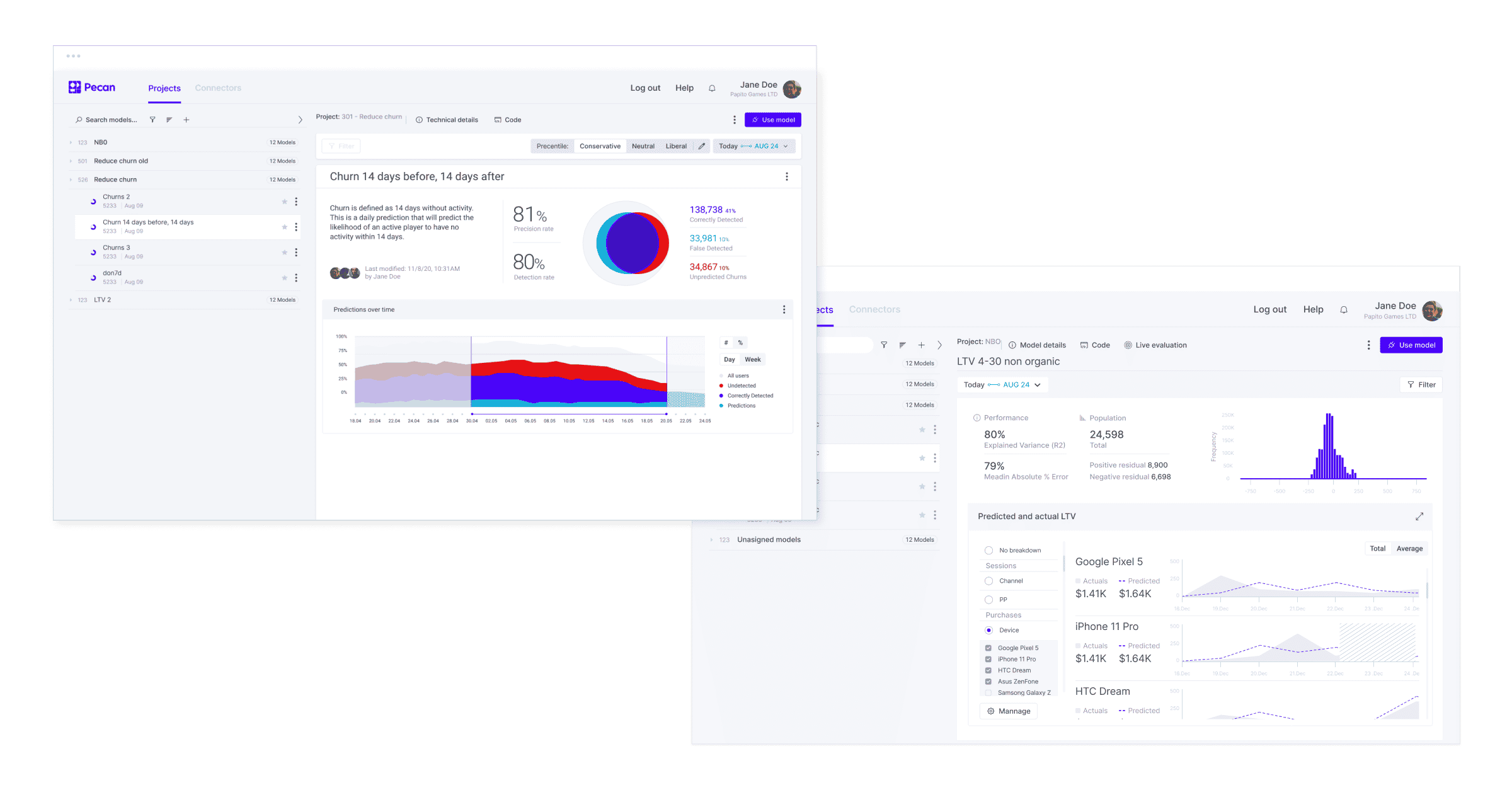

Today’s business people are used to convenient UIs and self-serve products. That expectation means that a core part of in-house AI is building an adequate visualization of predictions from the ground up.

Consider just the first level of difficulty. Unlike business intelligence, predictive analytics requires more than stacking past events in bar charts. It requires inventing a way to view both the future and the present while time keeps passing. You'll want to compare predicted results to actual historical outcomes and visualize the delta. And that's just the beginning.
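To make "compare predicted results to actual outcomes" concrete, here is a minimal Python sketch of the kind of logic an in-house team would have to build and then maintain. It assumes weekly predictions stored in a hypothetical `WeeklyResult` record; the names and numbers are illustrative only and are not part of any Pecan product. It computes the per-week delta only for weeks that have already elapsed, plus a simple mean absolute error summary.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class WeeklyResult:
    """One week of predicted vs. observed outcomes (hypothetical schema)."""
    week: str
    predicted: float          # e.g. predicted number of churned customers
    actual: Optional[float]   # None while the week is still in the future


def deltas(results: List[WeeklyResult]) -> List[Tuple[str, float, float, float]]:
    """Return (week, predicted, actual, actual - predicted) for weeks with known outcomes.

    Weeks whose outcome hasn't happened yet are skipped: there is nothing
    to compare them against until time passes.
    """
    return [
        (r.week, r.predicted, r.actual, r.actual - r.predicted)
        for r in results
        if r.actual is not None
    ]


def mean_absolute_error(results: List[WeeklyResult]) -> Optional[float]:
    """Average size of the miss across completed weeks, or None if none are complete."""
    rows = deltas(results)
    if not rows:
        return None
    return sum(abs(row[3]) for row in rows) / len(rows)


if __name__ == "__main__":
    # Illustrative numbers only, not real results.
    history = [
        WeeklyResult("2024-W01", predicted=120.0, actual=132.0),
        WeeklyResult("2024-W02", predicted=95.0, actual=90.0),
        WeeklyResult("2024-W03", predicted=110.0, actual=None),  # not yet observed
    ]
    for week, pred, act, delta in deltas(history):
        print(f"{week}: predicted {pred:.0f}, actual {act:.0f}, delta {delta:+.0f}")
    print("MAE:", mean_absolute_error(history))
```

Even this toy version has to decide how to treat weeks that haven't closed yet. A production dashboard would also need to chart these deltas, refresh them as each week ends, and handle late-arriving data, all of which is code someone has to test and maintain.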

The fundamental flaw in choosing to build in-house predictive analytics over buying software is that building software means testing, shipping, and maintaining code. Some product leaders use the concept of "build tokens" to ration that effort. Under this approach, the business might, for example, be allowed to request only three internally built tools for operations, analytics, or other non-customer-facing needs.

Ultimately, our view is that fast and ongoing value from data science is only possible with automation and continuous optimization. We package up that approach and put a bow on it in a low-code platform.

7. Translating Results to Executives

If you don't yet have data scientists, you're introducing your board to a new field whose results can be hard to translate. Many of the disciplines under the AI umbrella mentioned earlier can take months, or even years, to produce results.

In a world of growing, shifting tech stacks, analytics and technology leaders know the pain of bringing new initiatives to their boards with hazy or lackluster results. No one wants to say yet again, “We just need more time.”

Tried-and-True Use Cases Lock in ROI

A platform may not make sense for every AI initiative. At Pecan, we're keenly aware of these costs because they give us a roadmap for automating away the inefficiency, wasted time, and misaligned goals that lead to dead-end projects. These issues are why 75% of AI projects underwhelm.

Some use cases are proven to drive value with predictive analytics: predicting and preventing customer churn before it happens; forecasting demand to keep stores, fulfillment centers, and manufacturing stocked at equilibrium; and identifying customers likely to convert, whether to cross-sell, upsell, or simply maximize lifetime value.

For some specialized projects, or at giant tech companies, building a data science department to create many custom applications can make sense. But if your company's offerings are not themselves data products, keep the hidden costs of building data science in-house in mind.

If you want to assess your predictive readiness, request a use case consultation and we can help point you down the right path.